The Global Age of Algorithm: Social Credit and the Financialisation of Governance in China

Beautiful credit! The foundation of modern society. Who shall say that this is not the golden age of mutual trust, of unlimited reliance upon human promises?

(Mark Twain and Charles Dudley Warner, 1873, The Gilded Age: A Tale of Today)

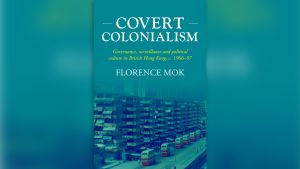

In recent years, few news items out of China have resulted in as much anxiety and fear in western media and public discourse as the Chinese government’s on-going attempts to create a ‘social credit system’ (shehui xinyong tixi) aimed at rating the trustworthiness of individuals and companies. Most major western media outlets have spent significant energy warning about China’s efforts to create an Orwellian dystopia. The most hyperbolic of these, The Economist, has even run menacing headlines like ‘China Invents the Digital Totalitarian State’ and ‘China’s Digital Dictatorship’ (The Economist 2016b; The Economist 2016c). These articles both implicitly and explicitly depict social credit as something unique to China: a nefarious and perverse digital innovation that could only be conceived of and carried out by a regime like the Chinese Communist Party (Daum 2017).

Social credit is thus seen as signalling the onset of a dystopian future that could only exist in the Chinese context. But how unique to China is this attempt to ‘build an environment of trust’—to quote the State Council—using new digital forms of data collection and analysis (General Office of the State Council 2016)? Is this Orwellian social credit system indicative of an inherently Chinese form of digital life, or is it a dark manifestation of our collective impulses to increase transparency and accountability (at the expense of privacy), and to integrate everyone into a single ‘inclusive’ system to more easily categorise, monitor, and standardise social activity? In this essay we propose that Chinese social credit should not be exoticised or viewed in isolation. Rather, it must be understood as merely one manifestation of the global age of the algorithm.

Engineering a Trustworthy Society

While there have long been discussions about creating an economic and social rating system in China, they took a much more concrete form in 2014, with the publication of a high-level policy document outlining plans to create a nationwide social credit system by 2020 (State Council 2014). The proposed system will assign ratings to individuals, organisations, and businesses that draw on big data generated from economic, social, and commercial behaviour. The stated aim is to ‘provide the trustworthy with benefits and discipline the untrustworthy… [so that] integrity becomes a widespread social value’ (General Office of the State Council 2016). While official policy documents are light on detail with regard to how the social credit system will ultimately operate, they have suggested various ways to punish untrustworthy members of society (i.e. those with low ratings), such as through restrictions on employment, consumption, travel, and access to credit. In recent months, there have already been reports of blacklisting resulting in restrictions for individuals, but as of yet this only applies to those who have broken specific laws or ‘failed to perform certain legal obligations’ (Daum 2018a).

The Chinese social credit system is emerging rapidly, and the aforementioned blacklists are connecting data from dozens of governmental departments. However, it is still far from being a unified or centralised system. Like most new policies in China, social credit is being subjected to the country’s distinctive policy modelling process (Heilmann 2008), where local governments produce their own interpretations of policies, which then vie to become national models. Over 30 local governments have already started piloting social credit systems, which utilise different approaches to arrive at their social credit scores, and which use the scores to achieve different outcomes. In contrast to other policies, however, large Internet companies have also been given licenses to run their own pilots (Loubere 2017a). The most widely used private social credit system is Alibaba’s Sesame Credit, which utilises opaque algorithms to arrive at social credit scores for their customers. Those with high scores have been able to access a range of benefits from other Alibaba businesses and their partners (Bislev 2017). Sesame Credit is significant due to the huge amounts of economic data held by Alibaba through Alipay and Ant Financial, but it should not be conflated with governmental social credit system pilots. It is not clear how or if the government and private systems will be integrated in the future, which seems to be causing a degree of tension between regulators and the Internet giants (Hornby, Ju, and Lucas 2018).

Financial(ised) Inclusion

While social credit can be seen as an outgrowth of our collective impulse to achieve a more trustworthy society, a unified fully-functioning social credit system will ultimately turn the quest for trust through transparency and accountability upside down, because it would hold citizens responsible vis-à-vis their rulers. At the core of the emerging system, the State and financial actors define, quantify, and calculate trustworthiness and honesty—it is a technocratic fix based on the logic that, with the correct set of algorithms, the good citizen can be engineered into society. Social credit therefore seeks to transform individuals into a new ‘civilised’ (and ‘credit conscious’) population through the imposition of an incentive and disincentive system that can mould logical profit-maximising citizens into civilised subjects.

In the case of China’s proposed social credit system, as with any credit rating system, these rewards and punishments are meted out through engagement with, and incorporation into, the market. The calculation of credit scores requires market activity, which in turn requires a credit score. Moreover, if social credit is to live up to its technocratic promise of systematically eliminating untrustworthiness, everyone must be assessed equally, i.e. everyone must be included in the system. In the absence of a social credit score the worst must be assumed, meaning that the burden of proving one’s trustworthiness falls to the individual. Thus, in a society dominated by social credit, integration into the socioeconomic system is a necessity rather than a choice. In this way China’s social credit resonates with the global financial inclusion project, which seeks to integrate marginal and impoverished populations into the global capitalist system (primarily through expanded access to credit) as a means of promoting economic development and social empowerment.

In the same way that Chinese social credit appears poised to extract huge amounts of personal data from individuals in its quest to create a trustworthy society, proponents of financial inclusion justify intrusive methods of assessing creditworthiness in order to reduce lender risk from untrustworthy borrowers. Indeed, just months before their hyperbolic headlines about China’s digital authoritarianism, The Economist praised the use of psychometrics and other personal digital data by lenders in developing contexts as being a beneficial financial innovation (The Economist 2016a). In this way, the financial inclusion project depicts the application of financialised logics as the means of producing a more fair and accountable inclusive system, where the trustworthy reap rewards that were denied them in the past. However, underpinning this neoliberal fantasy is a glaring contradiction that shatters the illusion of inclusion as being unbiased and fair—those with capital are able to set the terms of their engagement with the capitalist system much more easily than those without.

This points to the fact that the rich will largely be able to extract more of the rewards from their participation in financialised rating systems, such as the social credit system, while avoiding the sanctions. Moreover, punishments are much more dramatic for those without accumulated capital, as their very existence depends on their continued participation in the capitalist system for daily survival. From this perspective, the spectre of China’s financialised social credit system portends a society comprising individual micro-entrepreneurs operating in a shared economy mode where livelihoods are determined by credit scores. Indeed, Sesame Credit already works with sharing economy apps, such as Daowei, which provides a platform for a literal gig economy comprised of individuals (with their credit scores listed) advertising the sale of their services or products (Loubere 2017b). Those looking for a plumber in the area can select the one with the highest score, just as people in the west choose hotels and restaurants based on Yelp or TripAdvisor reviews.

Financialisation Gone Wild

In this sense, the emergence of social credit represents an unprecedented climax of the global financialisation project. Financialisation can be broadly defined as ‘the increasing role of financial motives, financial markets, financial actors, and financial institutions in the operation of domestic and international economy’ (Epstein 2005, 3). Social credit opens the door for financialising social behaviour. To elaborate this claim, consider the relation between social and financial capital. The OECD, for example, defines social capital as ‘networks together with shared norms, values and understandings that facilitate co-operation within or among groups’ (Keeley 2007, 103). In the digital age these networks become the linchpins between the social and economic spheres. On the one hand, networks are more concrete and easier to observe than the norms and values shaping the perceptions and behaviour of network members. On the other hand, social networks represent a crucial means for both gaining access to material resources and shaping the rules for resource distribution. Thus, analysing and contextualising social networks in a big-data driven world allows for inferences to be made about both the social and economic attributes of an individual.

The invention of social credit establishes an explicit and tangible link between social behaviour and economic benefits. In this context the State is able to assume a new role not dissimilar to that of a corporate shareholder. Social credit creates a market for social capital and transplants the rationale of profit maximisation into the realm of interpersonal relationships. Through managing networks, digital activity, and private action, an individual or organisation can impact social value and, by extension, financial capital. Thus, social credit creates new incentives that can be used to align the interests of citizens and organisations with those of the Government. The state, as a shareholder in ‘the people’, enjoys the dividends of good behaviour and loyalty, which is rewarded through economic privileges. In this all-encompassing financialised system, social action becomes increasingly entrenched within the economic realm, and individual behaviour is more and more shaped by financial motives. In a nutshell, social credit represents the ultimate marketisation of political control because it provides incentives for maximising citizen value through politically and commercially aligned social behaviour.

Using algorithms to render citizens and organisations compliant with the visions and rationales of the ruling regime reduces the State’s information and monitoring costs dramatically. In the context of China, this has the potential to reshape the ‘fragmented authoritarian’ model, which is characterised by decentralised decision-making and policy implementation (Mertha 2009). One could envision a future in which the many local officials and bureaucrats who enjoy privileges due to the Central leadership’s reliance on their support to govern the masses will be subject to the rule of algorithms themselves. Only a small elite would be needed to manage algorithmic rule, entailing a dramatic re-concentration of power. If Chinese experiments are successful, they will certainly serve as a model for many other countries: authoritarian regimes, democratic systems with authoritarian tendencies, and eventually democracies that struggle to maintain legitimacy in an increasingly polarised and fragmented political landscape.

The Repressive Logics of Financialised Governance

As noted above, despite the discourse of inclusion resulting in transparency and fairer distribution of resources, social credit and the financialisation of social behaviour are inherently biased and paradoxically result in socioeconomic exclusion within an all-encompassing inclusive system. In addition to being partial to those with capital, social credit will likely also widen other socioeconomic cleavages. Tests and experiments confirm again and again that data and algorithms are just as biased as society is and inevitably reproduce real life segmentation and inequality (Bodkin 2017). Cathy O’Neil, the author of Weapons of Math Destruction, for instance, warns that we need algorithmic audits (O’Neil 2016). After all, algorithms are not naturally occurring phenomena, but are reflections of the people (and societies) that create them. For this reason, the rule of algorithms must not be mistaken for an upgraded, more rational, and hyper-scientific rule of law 2.0. This is particularly true in China, where the concept of the rule of law has been increasingly developed and theorised by the Party-state to justify its attempts to consolidate control over society (Rosenzweig, Smith, and Trevaskes 2017).

In recent years China has already been providing glimpses of the repressive possibilities of algorithmic rule. In particular, recent reports about the construction of a sophisticated high-tech surveillance state in the Xinjiang Autonomous Region anticipate a near future where a digital social credit system sits at the core of a coercive security apparatus that is inherently biased against certain segments of society, producing dramatically inequitable and ultimately violent results (Human Rights Watch 2018). In an op-ed for the New York Times, James A. Millward describes the extent of the surveillance infrastructure primarily targeting the Uyghur ethnic minority. This includes police checkpoints, iris scans, mandatory spyware installed on mobile devices, and pervasive CCTV with facial recognition software. These surveillance technologies feed into, and draw on, a database that includes information about personal identity, family and friends, movement and shopping behaviour, and even DNA that is collected at medical check-ups organised by the government. Ultimately, these data are run through algorithms that assign residents public safety scores deeming them ‘safe’, ‘unsafe’, or somewhere in between (Millward 2018). Those who are deemed to be a threat are often detained and sent to re-education centres (Foreign Policy 2018). While this is not the government’s proposed social credit system per se, as these types of data are not legally allowed to be collected for public or market information (Daum 2018b), the logics underpinning this type of coercive surveillance infrastructure and the dreams of a nationwide citizen rating system are the same.

These developments represent a new reality that, while shocking initially, has become a banal part of everyday existence in a few short years. It is becoming increasingly clear that Xinjiang is a testing ground for technologies and techniques that will be rolled out nationwide—and even beyond—in the near future. For instance, over the spring festival period railway police in Henan used glasses augmented with facial recognition software connected to a centralised database to identify suspected criminals (Wade 2018). China’s massive surveillance market is also a global affair, with companies from around the world lining up to develop products for both the Chinese State and private businesses operating in the country (Strumpf and Fan 2017). This points to the fact that China is not developing its surveillance capabilities in isolation but is at the forefront of a global push towards increasingly centralised and interconnected surveillance apparatuses. Rating systems like the proposed social credit system will inevitably sit at the centre of surveillance regimes, providing the basis for how individuals and organisations are monitored and assessed, and what they are able to do (and not do) within society.

Our Dark Digital Futures

China’s proposed social credit system and the on-going construction of a surveillance state in Xinjiang represent the vanguard of more efficient means of socioeconomic control that are being taken up around the globe. They are dark outgrowths of the digital revolution’s supposed ‘liberation technologies’, underpinned by our very human compulsions for transparency, security, and fairness. Credit systems are, of course, not new, nor are they Chinese in origin. Most industrialised nations have been relying on credit ratings for a long time in order to quantify the financial risk of countries, firms, and individuals (Yu et al. 2015). Indeed, some of the most disturbing aspects of Chinese social credit, such as its integration into social media, are not uniquely or originally Chinese. In the US, Affirm, a San Francisco-based lender headed by PayPal co-founder Max Levchin, has been experimenting with social media data to evaluate the credit risk of car buyers since 2013. And Lenddo, a Hong Kong-based company, took an even bolder approach and informed debtors’ friends on Facebook when they didn’t pay instalments on time. Even the Orwellian nightmare unfolding in Xinjiang has its parallels elsewhere, such as with the recent revelations that in the US, the New Orleans Police Department and Immigration and Customs Enforcement (ICE) have been working with Peter Thiel’s company Palantir Technologies (which also has connections with the CIA and the Pentagon) to experiment with ‘predictive policing’ based on data collected from police databases, social media, and elsewhere (Fang 2018; Winston 2018).

Taken together, these developments reveal a vision of a digital future where we are all locked in a continuous and banal system of monitoring, accounting, categorising, and tracking, which has potentially far-reaching consequences for those who challenge the hegemony in any way, or even just for those who do not have the resources or capacity to participate in the socioeconomic system on the terms mandated.

Big-data driven social benchmarking sparks entrepreneurs’ and politicians’ imaginations about the opportunities lying ahead. And even though not all visions will be economically or politically viable everywhere in the world, the general trend appears to be global and irreversible. Social credit and the dreams of financialised governance are not Chinese or authoritarian particularities, but are, perhaps, our ‘shared destiny’ (gongtong mingyun), to use a term employed by Xi Jinping when talking about the Chinese vision for the future of humanity (Barmé, Jaivin, and Goldkorn 2014). This, however, makes it not less but more worrisome. The logical conclusion of society-wide financialisation is the blurring of the border between political and commercial realms, and the sharpening of the repressive tools wielded by the rich and powerful. In China this scenario appears to be inevitable. To quote Lucy Peng, the chief executive of Ant Financial, ‘[Sesame Credit] will ensure that the bad people in society don’t have a place to go, while good people can move freely and without obstruction’ (Hvistendahl 2017).